Here is another contribution by Doug Fraedrich. This time, the text would probably fit better on theexperimentaldiver.org: he tests dive computers’ No-Fly-Time algorithms and compares them to DAN’s recommendations and the respective user manuals. For some more theoretical considerations regarding diving and flying, see this older post.

Introduction

It is well known that divers must wait a certain time after diving before they can safely fly. The current No Fly Time (NFT) “best practices” guidelines from Divers Alert Network (DAN) [1] are listed below:

- For a single no-decompression dive, a minimum preflight surface interval of 12 hours is suggested.

- For multiple dives per day or multiple days of diving, a minimum preflight surface interval of 18 hours is suggested.

- For dives requiring decompression stops, there is little evidence on which to base a recommendation, and a preflight surface interval substantially longer than 18 hours appears prudent.
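The three guidelines above can be written down as a small lookup, which makes the later compliance checks easy to reason about. This is only a sketch: the names `DiveCategory`, `DAN_MIN_HOURS` and `meets_dan_guideline` are hypothetical, and treating “substantially longer than 18 hours” as a strict lower bound of 18 hours is an assumption, since DAN gives no specific number for decompression dives.

```python
from enum import Enum


class DiveCategory(Enum):
    SINGLE_NO_DECO = "single no-decompression dive"
    REPETITIVE_OR_MULTIDAY = "multiple dives per day or multiple days of diving"
    DECO = "dive requiring decompression stops"


# Minimum preflight surface intervals in hours, taken from the list above.
DAN_MIN_HOURS = {
    DiveCategory.SINGLE_NO_DECO: 12,
    DiveCategory.REPETITIVE_OR_MULTIDAY: 18,
    # Assumption: "substantially longer than 18 hours" is modeled as "> 18".
    DiveCategory.DECO: 18,
}


def meets_dan_guideline(category, nft_hours):
    """Return True if a displayed NFT (in hours) satisfies the DAN minimum."""
    minimum = DAN_MIN_HOURS[category]
    if category is DiveCategory.DECO:
        return nft_hours > minimum   # strictly longer than 18 hours
    return nft_hours >= minimum      # "a minimum ... of 12 (or 18) hours"
```

With this encoding, a 12-hour NFT passes for a single no-deco dive but fails for a repetitive dive.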

These guidelines are primarily based on a paper by Richard D. Vann et al. [2] of Divers Alert Network, which analyzed the risk of Decompression Sickness (DCS) from 802 dives across nine different profiles: four single no-deco dives and five sets of repetitive dives. DAN did not test decompression dives in Reference 2, so the US Navy No Fly Times were used for this category of dives.

Many commercial off-the-shelf dive computers compute and display an NFT after surfacing from a dive. The level of documentation on exactly how it is computed varies from dive computer to dive computer; most units state in their manual that they use the DAN guidelines.

The objective of this report is to test several representative commercial off-the-shelf dive computers in a pressure chamber that simulates different categories of dives, and to assess the following: 1) does each computer conform to the DAN NFT guidelines? and 2) does each computer conform to what is described in its owner’s manual? The dive computers tested are shown in Figure 1 and listed in Table 1.

| Computer | Main Dive Algorithm | No Fly Time computations (as stated in User Manual) |
|---|---|---|
| Deepblu Cosmiq+ | Buhlmann ZHL-16c | The No-Fly Time is based on the calculations of your desaturation time according to your actual dive profile. The No-Fly Time will be counted downwards every half hour. |
| Suunto EON Steel | 15-tissue Suunto-Fused | No-fly time is always at least 12 hours and equals desaturation time when it is more than 12 hours. If decompression is omitted during a dive so that Suunto EON Steel enters permanent error mode, the no-fly time is always 48 hours. |
| Oceanic VT4.0 | Z+/DSAT | FLT Time is a count down timer that begins counting down 10 minutes after surfacing from a dive from 23:50 to 0:00 (hr:min) |
| Mares Icon | 10-tissue RGBM | Icon HD employs, as recommended by NOAA, DAN and other agencies, a standard 12-hour (no-deco non-repetitive dives) or 24-hour (deco and repetitive dives) countdown. |
| Cressi Cartesio | “Cressi” 9-tissue RGBM | The no-fly times as follows: 12 hours after a single dive within the safety curve (without decompression). 24 hours after a dive outside the safety curve (with decompression) or repeated or multi-day dives performed correctly. 48 hours…if severe errors have been made during the dive. |
| SEAC Guru | Buhlmann ZHL-16B | For single dives that did not require mandatory deco stops, wait a minimum interval of 12 hours on the surface. In the event of multiple dives in a single day, or multiple consecutive days with dives, wait a minimum interval of 18 hours. For dives that required mandatory deco stops, wait a minimum interval of 24 hours. |
| AquaLung i300C | Z+ | The Time to Fly countdown shall begin counting from 23:50 to 0:00 (hr:min), 10 minutes after surfacing from a dive. |
| Garmin Descent MK1 | Buhlmann ZHL-16c | After a dive that violates the decompression plan, the NFT is set to 48 hours. (Note: No mention of NFT after a nominal dive.) |
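Two of the manual rules quoted in Table 1 are simple enough to state as code. The sketch below restates the Suunto EON Steel rule (the greater of 12 hours and the desaturation time, or 48 hours in permanent error mode) and the countdown described for the Oceanic VT4.0 and AquaLung i300C (23:50 to 0:00, starting 10 minutes after surfacing). The function names are hypothetical, the desaturation time is taken as an input rather than computed, and returning `None` before the countdown starts is an assumption, because the manuals quoted here do not say what is displayed during those first 10 minutes.

```python
def suunto_manual_nft_hours(desat_hours, permanent_error=False):
    """NFT in hours as described in the Suunto EON Steel manual quote."""
    if permanent_error:
        return 48.0  # omitted decompression leading to permanent error mode
    # "always at least 12 hours and equals desaturation time when it is more"
    return max(12.0, desat_hours)


def countdown_manual_nft_minutes(minutes_since_surfacing):
    """Remaining countdown in minutes for the Oceanic/AquaLung manual rule."""
    start_delay = 10              # countdown begins 10 minutes after surfacing
    start_value = 23 * 60 + 50    # 23:50 expressed in minutes
    if minutes_since_surfacing < start_delay:
        return None               # assumption: display before start is unspecified
    elapsed = minutes_since_surfacing - start_delay
    return max(0, start_value - elapsed)
```

Note that the 10-minute delay plus the 23:50 countdown adds up to exactly 24 hours after surfacing, which matches the 24:00 values recorded for these two computers.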

Methods

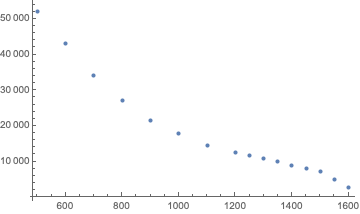

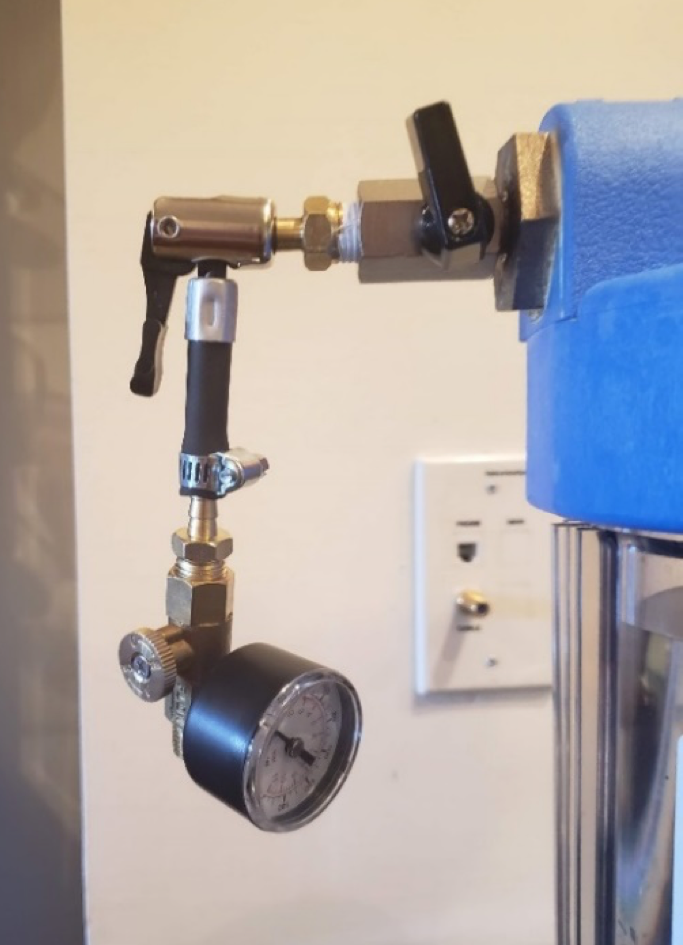

The dive computers were subjected to simulated dives in a small pressure chamber (Figure 2). This chamber was a Pentair Pentek Big Blue 25 cm chamber with a maximum allowable pressure of 6.9 bar.

The units under test were placed in the chamber with the display facing out (as illustrated in Figure 2), and the chamber was filled with fresh tap water. Air pressure was added from the top of the chamber with a standard bicycle pump (Velowurks Element floor pump). Even though the relationship between pressure and indicated depth is well known, several “calibration runs” were performed before the testing to verify the repeatability of the calibration. For these calibration runs, a Shearwater Perdix dive computer was used.

Pressure was released in a controlled way via an added vent valve (Figure 3). This ensured that the simulated ascent rates were not too fast.

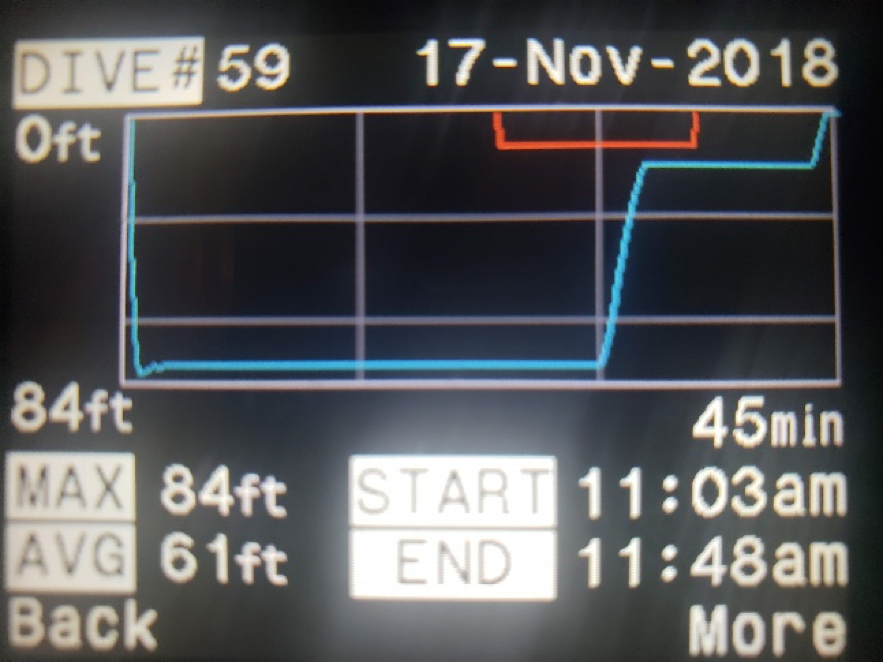

Figure 4 shows an actual profile using the test chamber.

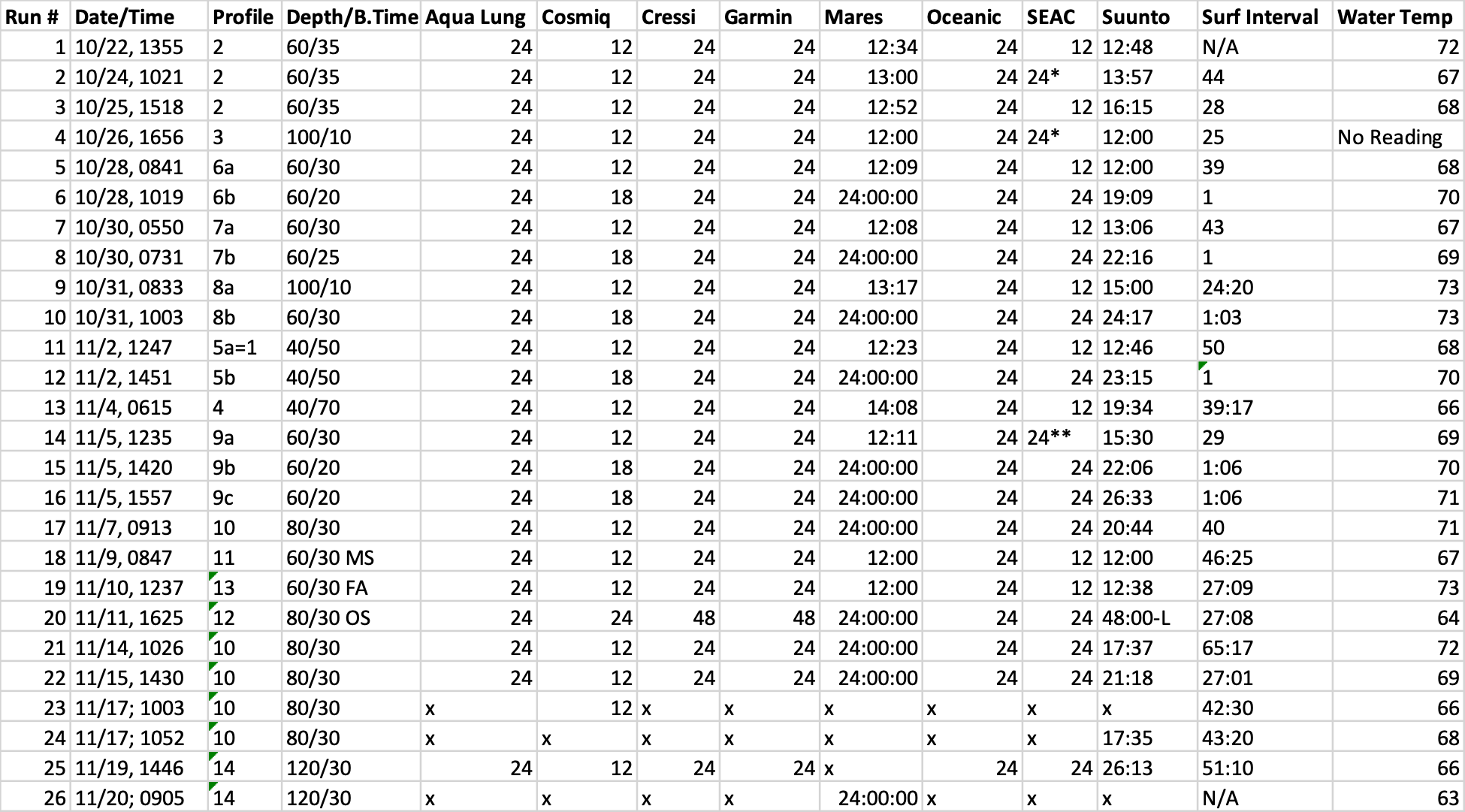

A run list was prepared based on the dives described in Reference 2; see run list in Table 2.

| Category | Profile | Depth / Bottom Time |
|---|---|---|
| Single Dives | #1 | 12 m / 50 min |
| | #2 | 18 m / 35 min |
| | #3 | 30 m / 10 min |
| | #4 | 12 m / 70 min |
| Repetitive Dives | #5 | 12 m / 50 min, 1 hr SI, 12 m / 50 min |
| | #6 | 18 m / 30 min, 1 hr SI, 18 m / 20 min |
| | #7 | 18 m / 30 min, 1 hr SI, 18 m / 30 min |
| | #8 | 30 m / 10 min, 1 hr SI, 18 m / 30 min |
| | #9 | 18 m / 30 min, 1 hr SI, 18 m / 20 min, 1 hr SI, 18 m / 20 min |
| Deco Dives | #10 | 24 m / 30 min with all required deco stops: Group H (11:04) |
| | #14 | 37 m / 30 min with all required deco stops: Group L (16:50) |
| “Violation” Dives | #11 | 18 m / 30 min no-deco dive with missed safety stop |
| | #12 | 24 m / 30 min with all deco stops omitted |
| | #13 | 18 m / 30 min with fast ascent (~15 m/min) and 3 min safety stop |

Some of the dive times needed to be shortened compared to the original dives in Reference 2, because the bottom times in the “old” no-deco dives exceeded the No Decompression Limits on some of the computers tested. Since decompression dives were not tested in the DAN study in Reference 2, two dive profiles were added to the test matrix: a “marginal” deco dive, Profile #10 (US Navy Repetitive Group H), and a deeper, more stressful deco dive, Profile #14 (US Navy Repetitive Group L) [3]. The US Navy recommended No Fly Times are listed in parentheses in Table 2. The specific bottom time for the marginal deco dive was chosen as the time required to make the most conservative of all the computers go into decompression mode. The last category, “Violation Dives”, was added to test claims made in some of the manufacturers’ User Manuals.

The dive profiles in Table 2 were simulated and the displayed No Fly Time from each computer was recorded. The battery charge level was monitored for each computer and kept at acceptable levels. With the exception of the repetitive dives with a 1 hour surface interval, the interval between consecutive runs was the maximum of: 24 hours, the desaturation time, and the longest NFT displayed by any computer.
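The spacing rule between runs is a plain maximum over three quantities, sketched below. The function name `hours_before_next_run` is hypothetical, and passing a single desaturation value (the longest one reported) is an assumption, since the text does not say which computer’s desaturation time was used.

```python
def hours_before_next_run(desat_hours, displayed_nft_hours):
    """Minimum wait before the next non-repetitive run, in hours.

    desat_hours: longest desaturation time reported (assumed single value).
    displayed_nft_hours: NFTs displayed by all computers after the run.
    """
    longest_nft = max(displayed_nft_hours, default=0.0)
    return max(24.0, desat_hours, longest_nft)


# Illustrative values only, not taken from the test log.
wait = hours_before_next_run(20.0, [12.0, 24.0, 26.25])
```

In this illustrative case the longest displayed NFT (26.25 hours) governs; when every computer shows 24 hours or less and desaturation is shorter, the 24-hour floor applies.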

Results

A total of 26 runs were performed in this study; the raw results are shown in Table A1 in the Appendix. To start off the testing, Profile #2 was replicated several times to confirm that the results were repeatable. Across all runs, the water temperature varied from 17 °C to 23 °C; when repetitive dives were simulated, the water was not completely replenished with new tap water, so the second (and third) dives of repetitive sequences tended to have a water temperature about 1-2 °C warmer than the first. The ascent rate was monitored using several different dive computers; of these, the SEAC Guru displayed numerical values, in units of ft/min. Ascent rates were monitored constantly and were typically in the range of 5-15 ft/min (about 1.5-5 m/min).

The results from Table A1 are summarized in Table 3. Based on these data, each of the eight dive computers can be assessed as to whether it is compliant with the DAN guidelines and whether it actually computes what is described in its manual.

| Computer | Single Dive | Repetitive Dive | Deco Dive | “Violation Dives” |
|---|---|---|---|---|
| Deepblu Cosmiq+ | 12:00 | 18:00 | Grp H: 12:00; Grp L: 12:00 | Missed Safety Stop: 12:00; Omitted Deco Stops: 24:00; Fast Ascent: 12:00 |
| Suunto EON Steel | 12:00-19:34* | 19:09-24:17 | Grp H: 17:35-21:18; Grp L: 26:13 | Missed Safety Stop: 12:00; Omitted Deco Stops: 48:00 (locked); Fast Ascent: 12:38 |
| Oceanic VT4.0 | 24:00 | 24:00 | Grp H: 24:00; Grp L: 24:00 | Missed Safety Stop: 24:00; Omitted Deco Stops: 24:00; Fast Ascent: 24:00 |
| Mares Icon | 12:00-14:08 | 24:00 | Grp H: 24:00; Grp L: 24:00 | Missed Safety Stop: 12:00; Omitted Deco Stops: 24:00; Fast Ascent: 12:00 |
| Cressi Cartesio | 24:00 | 24:00 | Grp H: 24:00; Grp L: 24:00 | Missed Safety Stop: 24:00; Omitted Deco Stops: 48:00; Fast Ascent: 24:00 |
| SEAC Guru | 12:00 | 24:00 | Grp H: 24:00; Grp L: 24:00 | Missed Safety Stop: 12:00; Omitted Deco Stops: 24:00; Fast Ascent: 12:00 |
| AquaLung i300C | 24:00 | 24:00 | Grp H: 24:00; Grp L: 24:00 | Missed Safety Stop: 24:00; Omitted Deco Stops: 24:00; Fast Ascent: 24:00 |
| Garmin Descent MK1 | 24:00 | 24:00 | Grp H: 24:00; Grp L: 24:00 | Missed Safety Stop: 24:00; Omitted Deco Stops: 48:00; Fast Ascent: 24:00 |

Discussion

Single Dives:

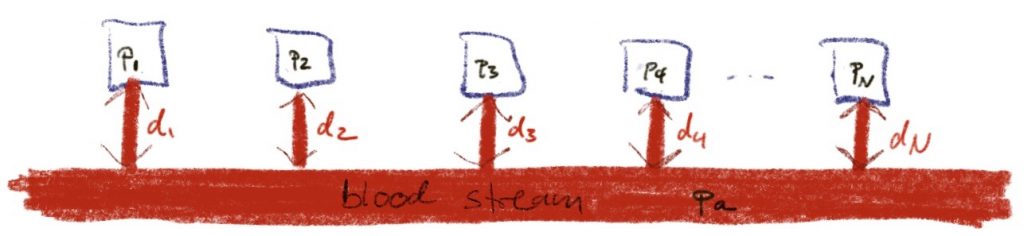

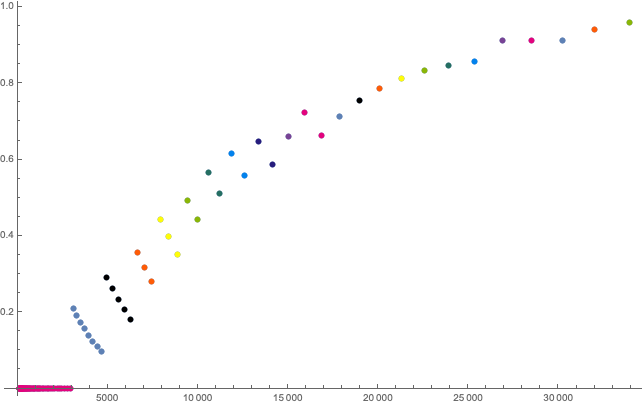

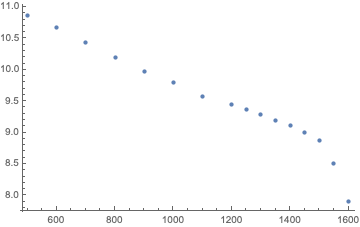

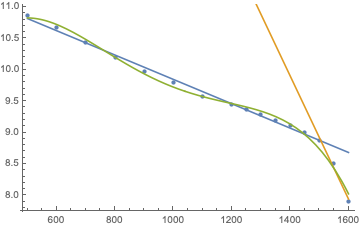

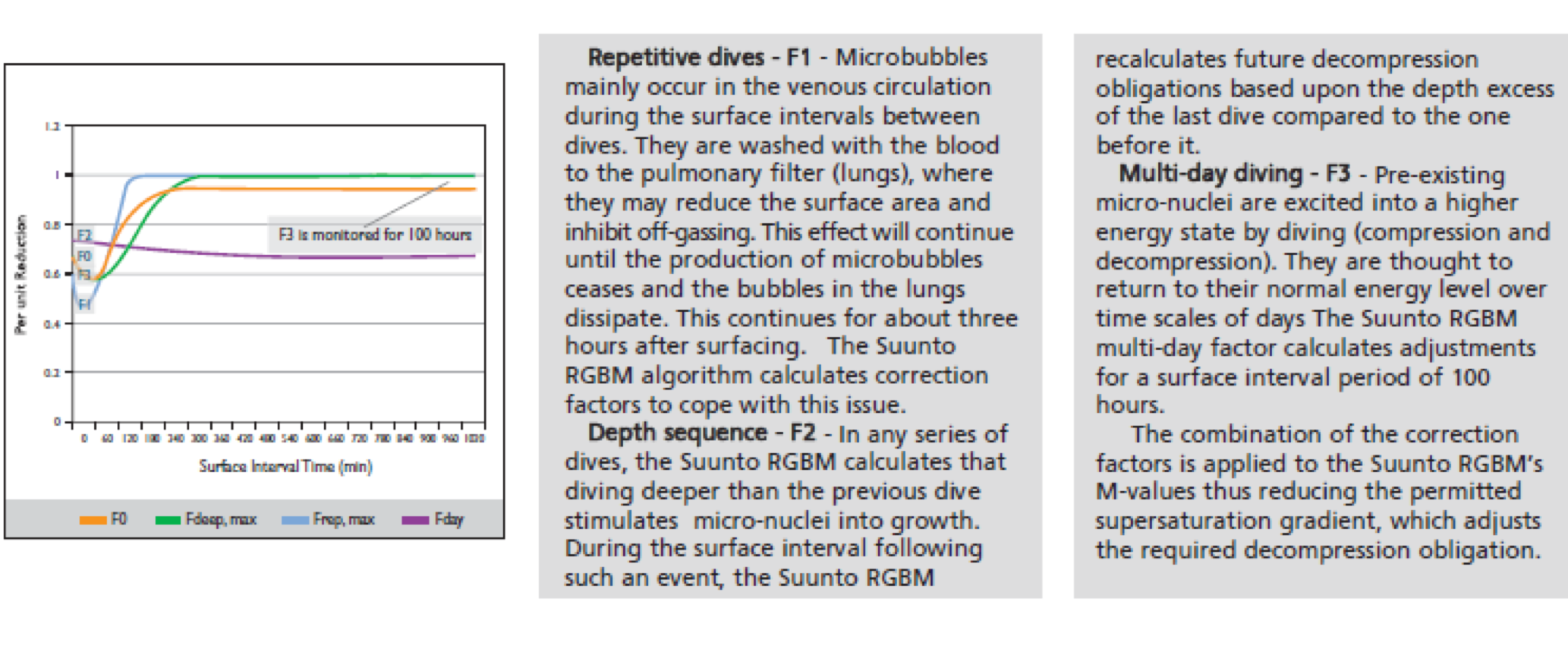

All of the computers were compliant with the DAN guidelines for this type of dive. Both the Mares and the Suunto displayed a longer NFT when the exact same single dive profile (#2) was repeated after a surface interval of about 44 hours. There is little documentation on the Mares RGBM variant, but the Suunto variants are known to factor the effects of multi-day diving into their bubble growth (and presumably desaturation) calculations. This multi-day factor, known as “F3”, persists for up to 100 hours [4], Figure 4. Multi-day diving is not covered in the DAN NFT guidelines.

Repetitive Dives:

All of the computers were compliant with the DAN guidelines for this type of dive. Because the Suunto EON Steel bases its repetitive-dive NFT on desaturation time, its reported NFT depended on the exact nature of the repetitive dives and presumably on the dives made in the previous 100 hours.

Decompression Dives:

Two computers registered an NFT of less than 18 hours for the Group H deco dive: the Deepblu Cosmiq+ required only 12 hours, and the Suunto EON Steel displayed NFTs ranging from 17:35 to 21:18 (depending on previous dives and surface interval). Note that both User Manuals state that NFTs are based on internally computed desaturation times and not on the DAN guidelines. The Group L dive required significant total decompression time (~60 min). All of the computers registered the same NFT as for the Group H dive, except the Suunto EON Steel, which increased the required NFT to 26:13, which is above the DAN guideline. It should be noted that all computers registered NFTs that exceeded the time suggested by the US Navy for Repetitive Group H [3]; however, the Deepblu Cosmiq+ would fall short of the US Navy recommendation if used for a deco dive having Repetitive Group I or higher.
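The Group H comparison can be reproduced directly from the lowest NFT each computer displayed in Table 3. The sketch below uses the 18-hour DAN figure and the 11:04 US Navy figure for Group H from Table 2; the helper `hhmm_to_minutes` and the variable names are hypothetical.

```python
def hhmm_to_minutes(text):
    """Convert an 'HH:MM' string such as '17:35' to minutes."""
    hours, minutes = text.split(":")
    return int(hours) * 60 + int(minutes)


DAN_DECO_MINUTES = hhmm_to_minutes("18:00")      # DAN: longer than 18 hours
US_NAVY_GROUP_H_MINUTES = hhmm_to_minutes("11:04")

# Lowest NFT displayed after the Group H deco dive (Profile #10), from Table 3.
group_h_lowest_nft = {
    "Deepblu Cosmiq+": "12:00",
    "Suunto EON Steel": "17:35",
    "Oceanic VT4.0": "24:00",
    "Mares Icon": "24:00",
    "Cressi Cartesio": "24:00",
    "SEAC Guru": "24:00",
    "AquaLung i300C": "24:00",
    "Garmin Descent MK1": "24:00",
}

# Computers whose lowest displayed NFT is under 18 hours.
below_dan = [name for name, nft in group_h_lowest_nft.items()
             if hhmm_to_minutes(nft) < DAN_DECO_MINUTES]

# Computers whose lowest displayed NFT is under the US Navy Group H time.
below_us_navy = [name for name, nft in group_h_lowest_nft.items()
                 if hhmm_to_minutes(nft) < US_NAVY_GROUP_H_MINUTES]
```

Here `below_dan` contains the Deepblu Cosmiq+ and the Suunto EON Steel, while `below_us_navy` is empty, consistent with the observations above.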

Violation Dives:

Some computers mentioned in their User Manual that they added an extra NFT penalty for ascent rate violations. Different computers defined “fast ascent violation” differently, so a target ascent rate of 50 ft/min was used in Profile #13, which qualified as a fast ascent for all computers. Profile #13 was executed and the actual ascent rate varied from 9 to 15 m/min during the ascent from 18 m to 5 m, which took about one minute. A three-minute safety stop at 5 m was performed. None of the computers appeared to adjust the NFT, based on a comparison to similar dives with a nominal ascent rate (Profiles 2, 6a, 7a and 9a). Several computers did sound alarms during the fast ascent. The safety stop may have been sufficient to satisfy the safety requirements of each computer’s dive algorithm. Similarly, none of the computers added an extra NFT penalty for Profile #11, which was a no-deco dive with a nominal ascent rate but a missed safety stop.

For omitted stops on a deco dive (Run #20, Profile 12), four computers added NFT compared to the same deco dive profile with all the required stops (Run #17, Profile 10). See Table 4.
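Because the fast-ascent test mixes imperial and metric units, a quick conversion helps confirm that the figures are consistent. This is a minimal sketch with hypothetical function names; it assumes a constant ascent rate over the 18 m to 5 m segment, which the real chamber ascent only approximated.

```python
FT_TO_M = 0.3048  # exact, by definition of the international foot


def ft_per_min_to_m_per_min(rate_ft_per_min):
    """Convert an ascent rate from ft/min to m/min."""
    return rate_ft_per_min * FT_TO_M


def ascent_minutes(start_depth_m, end_depth_m, rate_m_per_min):
    """Time to ascend between two depths at a constant rate (assumed)."""
    return (start_depth_m - end_depth_m) / rate_m_per_min


target_m_per_min = ft_per_min_to_m_per_min(50)   # Profile #13 target rate
fastest = ascent_minutes(18, 5, 15)              # at the highest observed rate
slowest = ascent_minutes(18, 5, 9)               # at the lowest observed rate
```

The 50 ft/min target works out to roughly 15 m/min, and ascending 13 m at 9 to 15 m/min takes between about 0.9 and 1.4 minutes, in line with the reported “about one minute”.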

| Computer | NFT for Deco Dive 10 w/ Stops | NFT for Deco Dive 10 w/o Stops |
|---|---|---|
| Deepblu Cosmiq+ | 12:00 | 24:00 |
| Suunto EON Steel | 17:35-20:44 | 48:00 (Locked) |
| Cressi Cartesio | 24:00 | 48:00 |
| Garmin Descent MK1 | 24:00 | 48:00 |

User Manual Discrepancies:

It was noted that several computers did not conform with the NFT rules stated in their User Manual; see Table 5.

| Dive Computer | User Manual Discrepancy |
|---|---|
| Mares Icon | For single dives, it displays the greater of 12 hours or the De-SAT time |
| Cressi Cartesio | Displays 24 hour NFT for all dives including no-deco dives with safety stop |
| SEAC Guru | Displays 24 hour NFT for repetitive dives |
| Garmin Descent MK1 | Displays 24 hour NFT for all no-deco dives (omitted in manual) |

Summary

Eight commercial dive computers were tested in a small pressure chamber to assess their computation and display of No Fly Times. All of the dive computers were found to be generally compliant with the standard DAN guidelines, with a few exceptions. Several computers used a very simple rule for NFT: Garmin, AquaLung, Oceanic and Cressi simply displayed 24 hours NFT for all nominal dives (both no-deco and decompression). Some computers added features that handled cases not covered in the DAN guidelines, e.g. multi-day diving (Mares and Suunto) and certain decompression guidance violations such as omitted decompression stops (Garmin, Suunto, Cressi and Deepblu).

References

1. https://www.diversalertnetwork.org/medical/faq/Flying_After_Diving

2. Richard D. Vann, et al. “Experimental trials to assess the risks of decompression sickness in flying after diving.” Journal of Undersea and Hyperbaric Medicine, 2004.

3. US Navy Diving Manual, Revision 7, 2016, Tables 9-7 and 9-6.

4. Suunto Reduced Gradient Bubble Model. June 2003. Available from: http://www.dive-tech.co.uk/resources/suunto-rgbm.pdf. [cited 2017 August 29].

Appendix A. Raw Test Results by Run

\* Not a valid run. The computer was erroneously set to Gauge Mode.

\*\* Not a valid run. Prior to the run, the SEAC conservatism factor was adjusted and this dive was considered a decompression dive. Given that the computer categorized this as a decompression dive, the NFT displayed conformed to the DAN guidelines and is consistent with the User’s Manual.