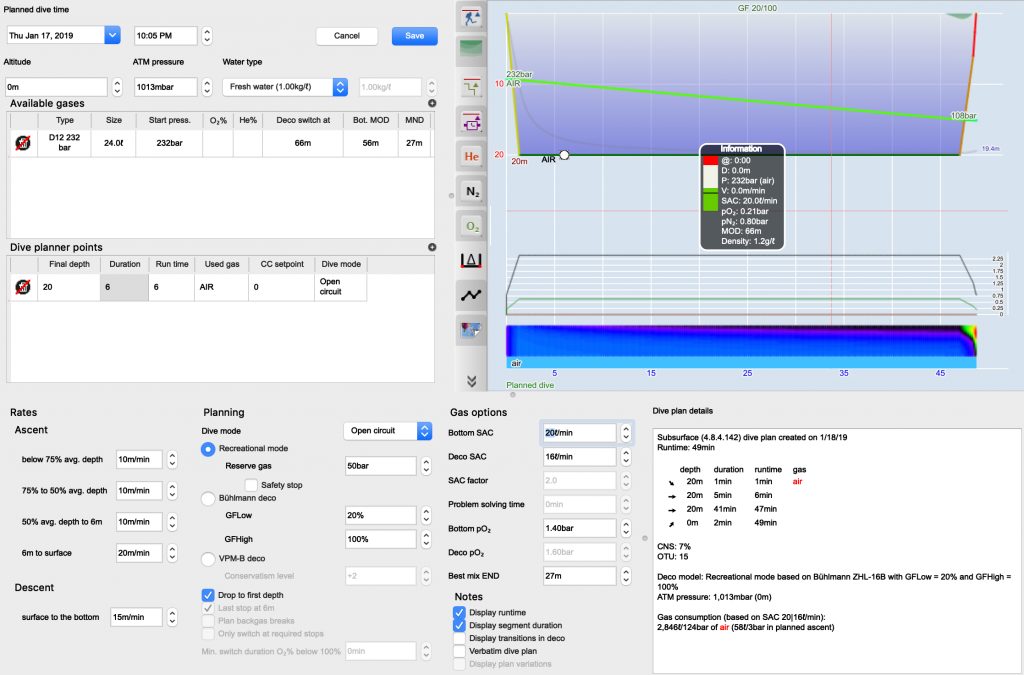

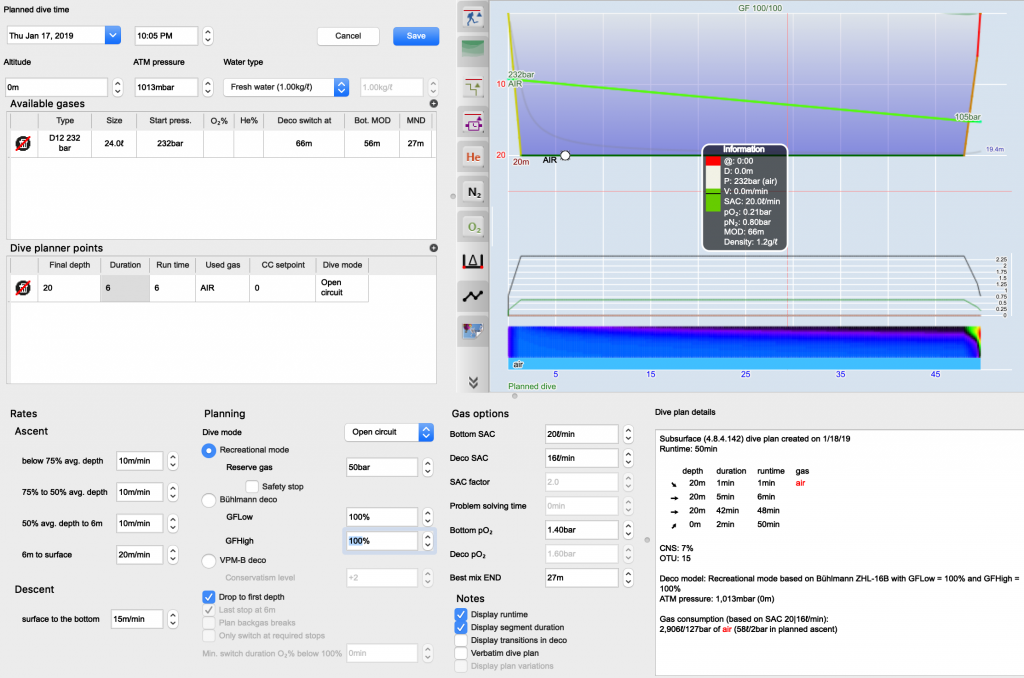

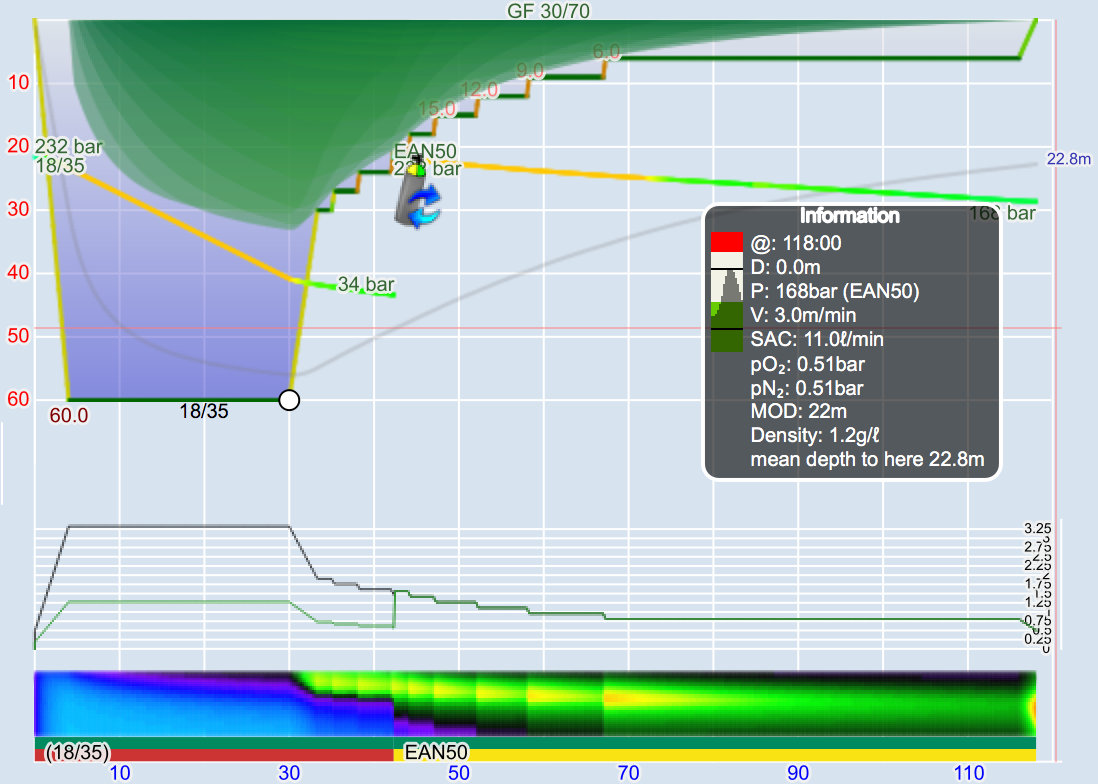

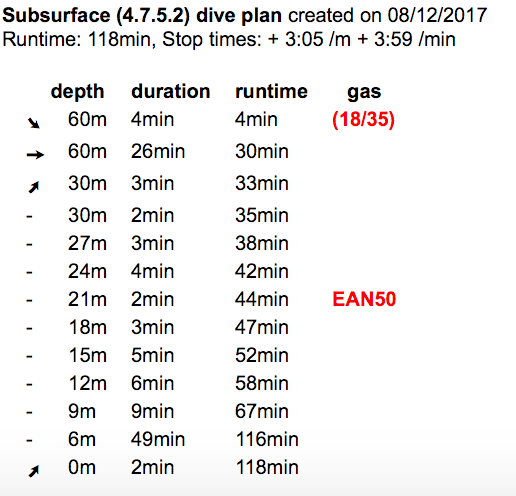

How good was the deco of my dive? For those of us who strive to improve our diving, this is a valid question when looking for ways to optimise how we get out of the water. In Subsurface, for example, we display various information, including the individual tissues’ ceilings as well as the heat map.

Recently, on ScubaBoard, I learned about a new product on the market: O’Dive, the first connected sensor for personalised dives. It consists of a Bluetooth-connected ultrasound Doppler sensor together with an app on your mobile phone. The user has to upload the profile data of the dive to the Subsurface cloud, from where the app downloads it and combines it with the Doppler data (for this, it asks for your Subsurface username and password?!? This is the first point where you might wonder whether this has been 100% thought through). The app then displays a rating (in percent) for your deco and suggests ways to improve it.

That sounds interesting. The somewhat ambitious price tag (about 1000 Euros for the sensor plus 1.50 Euros per dive analysed), however, kept me from simply buying one to test it. And since it’s a commercial device, they don’t exactly say what they are doing internally. But in their information material they give references to scientific publications, mainly by one research group in southern France.

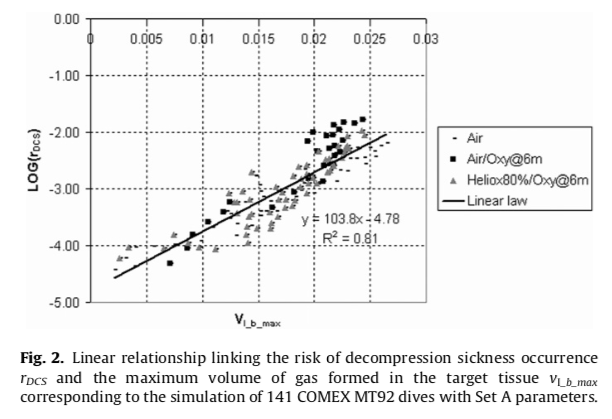

Some of these references are to conference proceedings and to articles in very specialised journals that neither my university’s library nor the Rubicon Archive nor SciHub has access to, but I could get hold of a few of the papers. One of those is “A new biophysical decompression model for estimating the risk of articular bends during and after decompression” by J. Hugon, J.-C. Rostain, and B. Gardette.

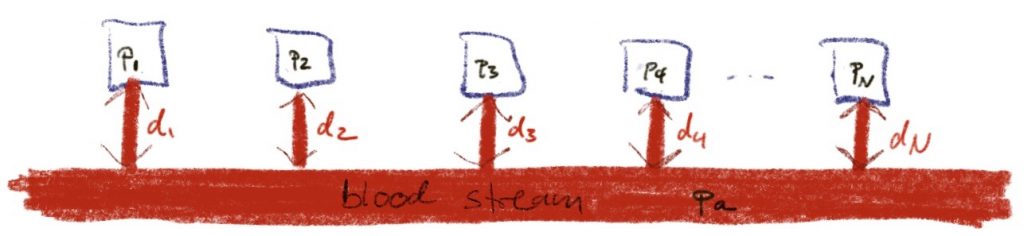

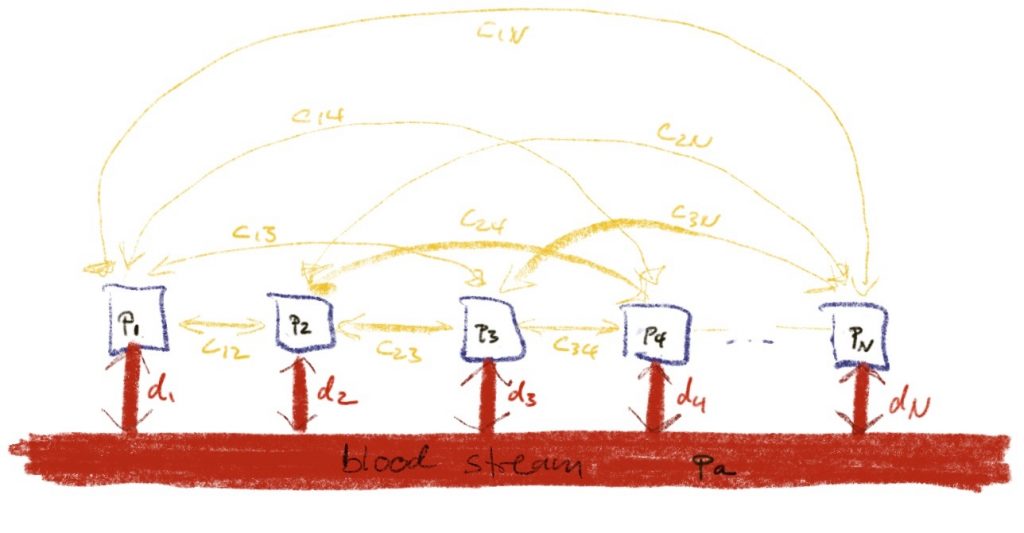

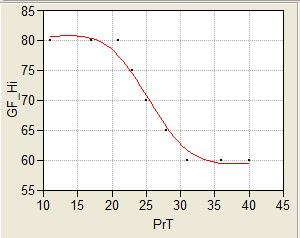

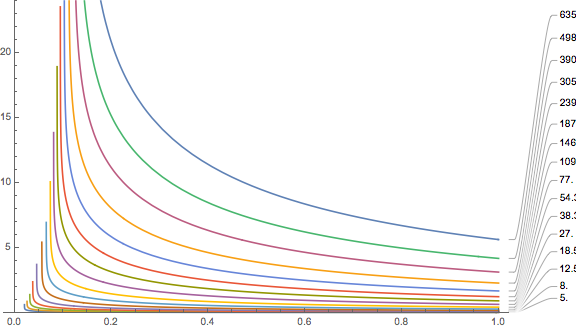

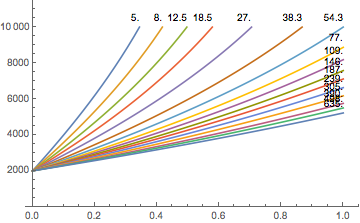

That one is clearly in the tradition of Yount’s VPM-B model (using several of the bubble formulas that I have talked about in previous posts). They use a two-tissue model (fitting the diffusion parameters to dive data) and find an exponential dependence of the risk of decompression sickness on the free gas in one of the tissues:

Note, however, that the “risk of decompression sickness” is not directly measured but is simply calculated from the parameter \(PrT = P\sqrt{t}\) (P is the ambient pressure in bar during the dive while t is the dive time in minutes, i.e. a characterisation of a dive profile compared to which a spherical cow in vacuum looks spot on; but this seems to be quite common, see also https://thetheoreticaldiver.org/wordpress/index.php/2019/06/16/setting-gradient-factors-based-on-published-probability-of-dcs/) using the expression

\(r_{DCS} = 4.07\, PrT^{4.14},\)

which apparently was found in some COMEX study. Hmm, I am not convinced, Mr. President.
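To see what these two formulas do with a dive, here is a minimal Python sketch for a hypothetical 25-minute dive to 30 m. It assumes an ambient pressure of 1 bar at the surface plus 1 bar per 10 m of sea water. Since no units or normalisation for \(r_{DCS}\) are given, the sketch only uses it for ratios: because of the power law, doubling PrT multiplies the calculated risk by \(2^{4.14}\), whatever the prefactor.

```python
import math


def prt(p_bar: float, t_min: float) -> float:
    """Exposure parameter PrT = P * sqrt(t) in bar*sqrt(min)."""
    return p_bar * math.sqrt(t_min)


def r_dcs(prt_value: float) -> float:
    """r_DCS = 4.07 * PrT**4.14 as quoted; units/normalisation are not stated."""
    return 4.07 * prt_value ** 4.14


# Assumption: 1 bar at the surface plus 1 bar per 10 m of sea water.
depth_m = 30
p = 1.0 + depth_m / 10

exposure = prt(p, 25)  # hypothetical 25-minute dive
print(exposure)  # 20.0

# The prefactor cancels in a ratio: doubling PrT scales the risk by 2**4.14.
print(r_dcs(2 * exposure) / r_dcs(exposure))  # roughly 17.6
```

Note that the whole dive profile is collapsed into a single pressure and a single time, which is exactly the point of the spherical-cow remark above.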

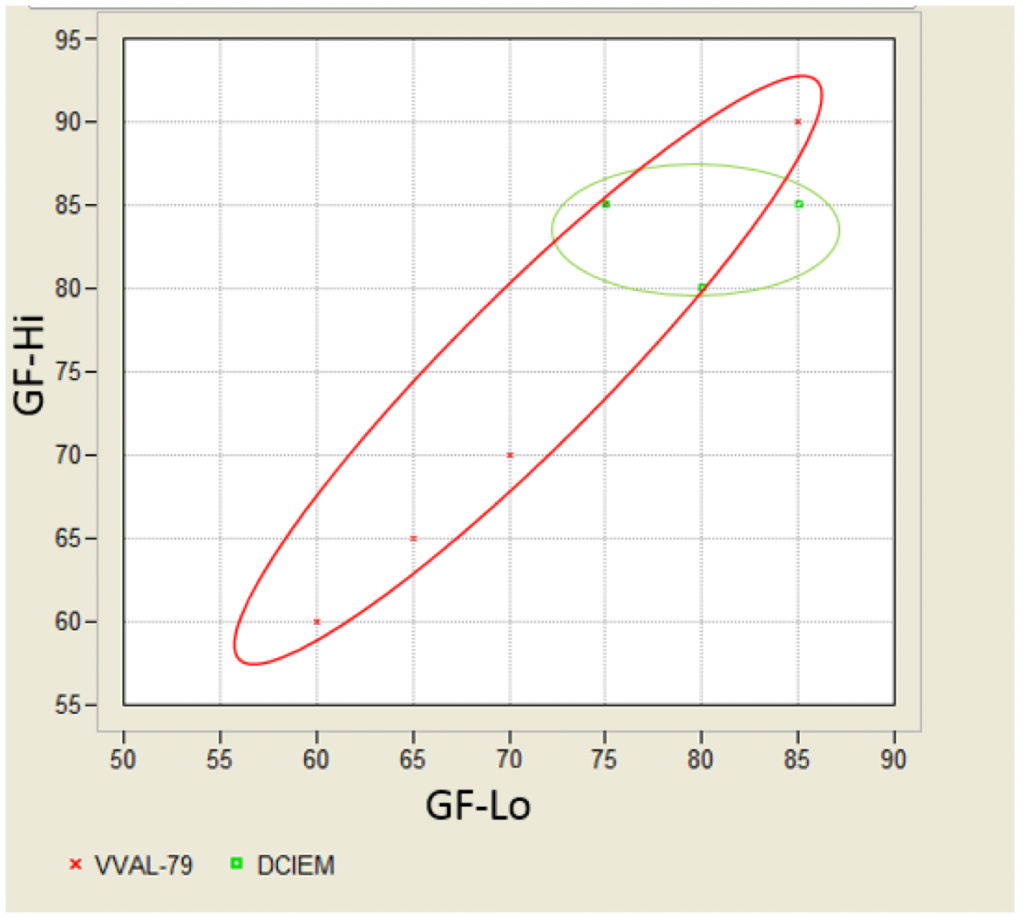

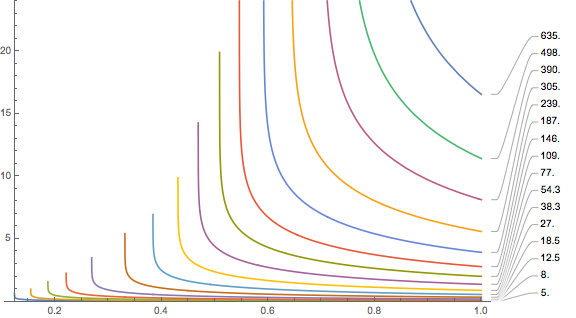

The second reference I could get hold of is a set of slides from a conference presentation by Julien Hugon titled “A stress index to enhance DCS risk assessment for both air and mixed gas exposures”, which sounds exactly like what O’Dive claims to do.

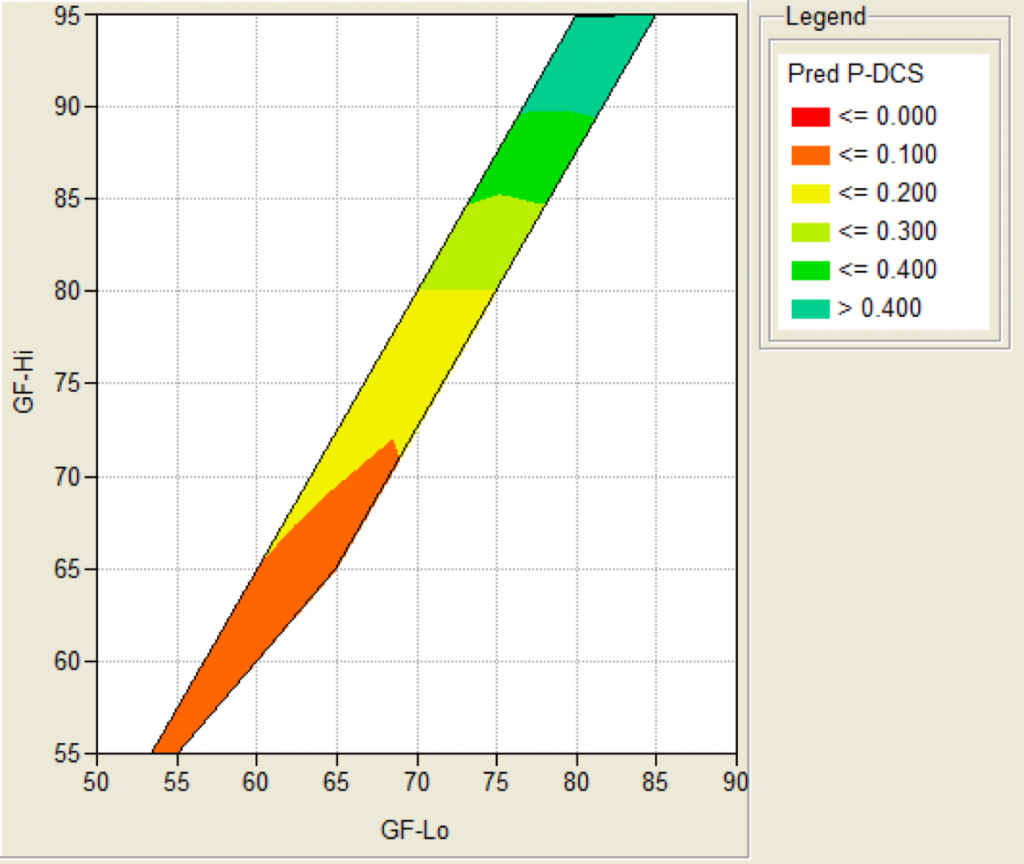

There, it is proposed to compute an index

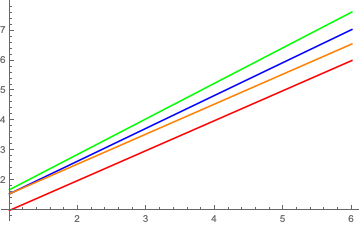

\( I = \frac{\beta\,(PrT - PrT^*)}{T^{0.3}}. \)

Here \(PrT^* = 12\ \mathrm{bar}\sqrt{\mathrm{min}}\), T is the total ascent time and \(\beta\) depends on the gas breathed: 1 for air, 0.8 for nitrox and 0.3 for trimix (hmm again, that sounds quite discontinuous). As you might notice, what does not enter this index is the depths at which you spend your ascent time, while PrT keeps growing with time indefinitely (i.e. there is never any saturation).
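Both observations can be checked with a minimal Python sketch of the index as quoted. Assumptions: PrT is evaluated with the bottom pressure and dive time as in the definition of PrT, T is in minutes, the two stop schedules are made up, and for comparison a single Haldane compartment breathing air is used with a hypothetical 40-minute half-time (fN2 = 0.79, water vapour ignored). This is not claimed to be what O’Dive actually computes.

```python
import math

PRT_STAR = 12.0  # bar*sqrt(min), threshold quoted in the slides
BETA = {"air": 1.0, "nitrox": 0.8, "trimix": 0.3}  # gas factors as quoted


def prt(p_bar: float, t_min: float) -> float:
    """PrT = P * sqrt(t) in bar*sqrt(min)."""
    return p_bar * math.sqrt(t_min)


def stress_index(p_bar: float, t_min: float, ascent_min: float, gas: str) -> float:
    """I = beta * (PrT - PrT*) / T**0.3, T = total ascent time in minutes."""
    return BETA[gas] * (prt(p_bar, t_min) - PRT_STAR) / ascent_min ** 0.3


def haldane_n2(p_bar: float, t_min: float, half_time_min: float,
               p_start: float = 0.79) -> float:
    """N2 tension of one Haldane compartment on air (hypothetical comparison)."""
    p_inspired = 0.79 * p_bar
    k = math.log(2) / half_time_min
    return p_inspired + (p_start - p_inspired) * math.exp(-k * t_min)


# Hypothetical ascents of equal total duration as (depth in m, minutes):
# only the summed time reaches the index, so the stop depths make no difference.
deep_stops = [(21, 3), (12, 5), (6, 12)]
shallow_stops = [(6, 20)]
i_deep = stress_index(4.0, 25, sum(m for _, m in deep_stops), "air")
i_shallow = stress_index(4.0, 25, sum(m for _, m in shallow_stops), "air")
print(i_deep == i_shallow)  # True

# PrT grows like sqrt(t) without bound, while the compartment saturates
# at the inspired N2 pressure (0.79 * 4 bar here).
for t in (10, 60, 240, 1440):
    print(t, round(prt(4.0, t), 1), round(haldane_n2(4.0, t, 40.0), 2))
```

The design choice here is deliberate: the compartment model is only a yardstick for what “saturation” means, not a proposal for how the index should be computed.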

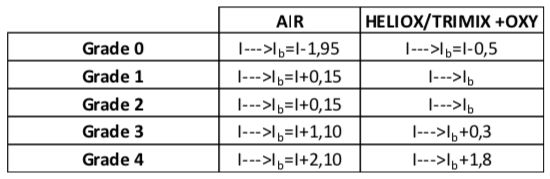

This index is then corrected according to a Doppler count (measured as a grade from 0 to 5):

This corrected index is then supposed to be a good predictor for the probability of DCS.

I am not saying that this is really what is going on in the app and the device; these are only speculations. But compared to 1000 Euros plus 1.50 Euros per dive, looking at the ceilings and the heat map in Subsurface sounds like quite good value for money to me.